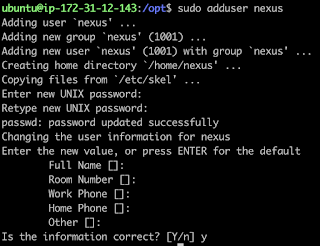

In this article, we are going to use Terraform to set up an EKS cluster.

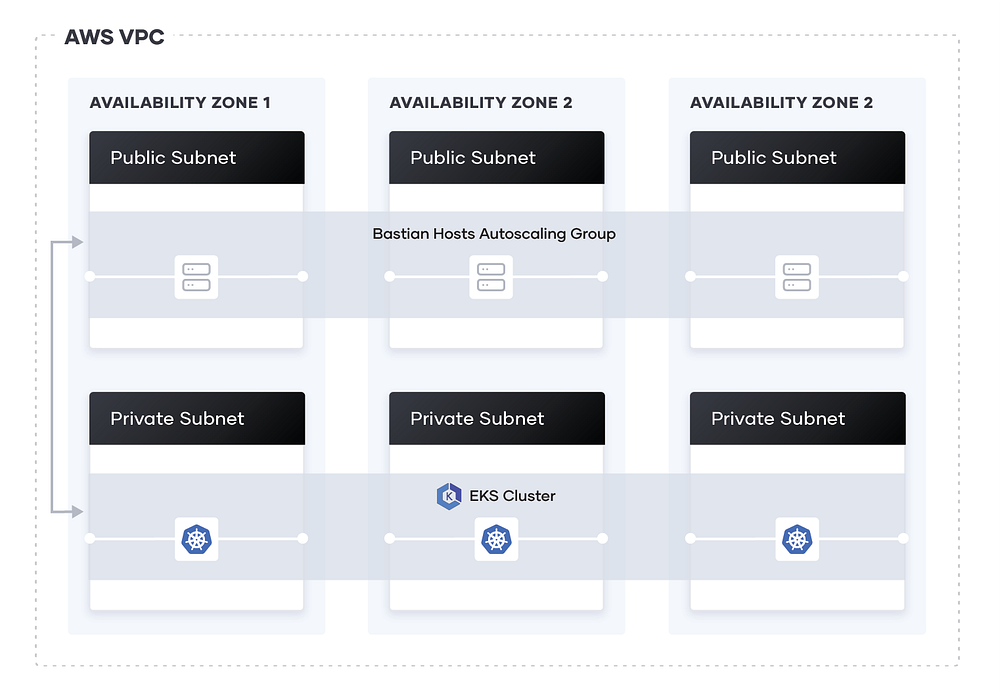

The image above shows an EKS cluster setup.

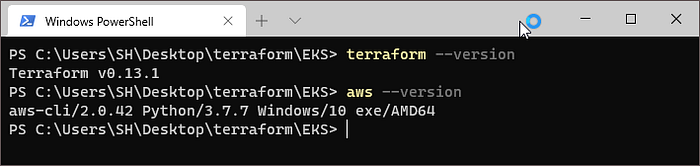

Prerequisites:

1. Install and configure the AWS CLI

2. Install Terraform

You can follow the instructions in my previous article to set up and configure the AWS CLI.

If you are using an AWS CLI version older than 1.16.156, you also need to install aws-iam-authenticator.
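Because this walkthrough depends on fairly old module and provider releases, it can help to record version constraints in a `terraform` block. The sketch below assumes Terraform 0.13 or later (needed for the `source` syntax); the exact constraint values are assumptions, not versions the author has tested.

```hcl
terraform {
  # Assumption: 0.13+ is required for the `source` attribute below.
  required_version = ">= 0.13"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 2.28.1" # same lower bound as the provider block in Step 1
    }

    kubernetes = {
      source = "hashicorp/kubernetes"
      # Assumption: `load_config_file` (used in Step 4) only exists before 2.0.
      version = "< 2.0"
    }
  }
}
```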

In this EKS environment, we are going to create the following AWS resources:

1. A VPC (so the cluster doesn't impact your existing cloud environment and resources) containing three public subnets and three private subnets.

2. Three security groups in the same VPC: one for worker-group-1, one for worker-group-2, and a third with SSH ingress rules shared by all worker groups.

3. An EKS cluster (control plane + 3 worker nodes).

Step 1: Creating a new VPC

```hcl
variable "region" {
  default     = "ap-south-1"
  description = "AWS region"
}

provider "aws" {
  # Terraform 0.13+ prefers this constraint in a required_providers block.
  version = ">= 2.28.1"
  region  = var.region # reuse the variable instead of hard-coding the region
}

# Availability zones of the selected region, used for the subnets below.
data "aws_availability_zones" "available" {}

locals {
  cluster_name = "training-eks"
}

module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "2.6.0"

  name                 = "training-vpc"
  cidr                 = "10.0.0.0/16"
  azs                  = data.aws_availability_zones.available.names
  private_subnets      = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
  public_subnets       = ["10.0.4.0/24", "10.0.5.0/24", "10.0.6.0/24"]
  enable_nat_gateway   = true
  single_nat_gateway   = true
  enable_dns_hostnames = true

  # Mark the VPC as shared with the EKS cluster.
  tags = {
    "kubernetes.io/cluster/${local.cluster_name}" = "shared"
  }

  # Public subnets are used for internet-facing load balancers.
  public_subnet_tags = {
    "kubernetes.io/cluster/${local.cluster_name}" = "shared"
    "kubernetes.io/role/elb"                      = "1"
  }

  # Private subnets are used for internal load balancers.
  private_subnet_tags = {
    "kubernetes.io/cluster/${local.cluster_name}" = "shared"
    "kubernetes.io/role/internal-elb"             = "1"
  }
}
```

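As you can see in the code, we create a new VPC with three public and three private subnets in the ‘ap-south-1’ region using the Terraform VPC module.

It also creates a single NAT gateway, which gives instances in the private subnets outbound internet access. The `kubernetes.io/role/elb` and `kubernetes.io/role/internal-elb` tags tell Kubernetes which subnets to use for internet-facing and internal load balancers, respectively.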
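The six /24 ranges follow a simple pattern. As a sketch, they could also be derived from the VPC CIDR with Terraform's built-in `cidrsubnet` function; the local names `vpc_cidr`, `private_subnet_cidrs` and `public_subnet_cidrs` are hypothetical and not part of the original configuration.

```hcl
# Hypothetical locals: derive the same six subnets from the VPC CIDR.
# cidrsubnet(prefix, newbits, netnum) adds 8 bits to the /16, giving /24 blocks.
locals {
  vpc_cidr = "10.0.0.0/16"

  # netnum 1, 2, 3 -> 10.0.1.0/24, 10.0.2.0/24, 10.0.3.0/24
  private_subnet_cidrs = [for i in range(1, 4) : cidrsubnet(local.vpc_cidr, 8, i)]

  # netnum 4, 5, 6 -> 10.0.4.0/24, 10.0.5.0/24, 10.0.6.0/24
  public_subnet_cidrs = [for i in range(4, 7) : cidrsubnet(local.vpc_cidr, 8, i)]
}
```

With these locals, the module arguments would become `private_subnets = local.private_subnet_cidrs` and `public_subnets = local.public_subnet_cidrs`, which keeps the subnet layout tied to the VPC CIDR if that ever changes.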

Step 2: Creating the security groups used by the EKS cluster

```hcl
# SSH access for worker-group-1, from the 10.0.0.0/8 range.
resource "aws_security_group" "worker_group_mgmt_one" {
  name_prefix = "worker_group_mgmt_one"
  vpc_id      = module.vpc.vpc_id

  ingress {
    from_port = 22
    to_port   = 22
    protocol  = "tcp"

    cidr_blocks = [
      "10.0.0.0/8",
    ]
  }
}

# SSH access for worker-group-2, from the 192.168.0.0/16 range.
resource "aws_security_group" "worker_group_mgmt_two" {
  name_prefix = "worker_group_mgmt_two"
  vpc_id      = module.vpc.vpc_id

  ingress {
    from_port = 22
    to_port   = 22
    protocol  = "tcp"

    cidr_blocks = [
      "192.168.0.0/16",
    ]
  }
}

# SSH access for all worker groups, from the three private (RFC 1918) ranges.
resource "aws_security_group" "all_worker_mgmt" {
  name_prefix = "all_worker_management"
  vpc_id      = module.vpc.vpc_id

  ingress {
    from_port = 22
    to_port   = 22
    protocol  = "tcp"

    cidr_blocks = [
      "10.0.0.0/8",
      "172.16.0.0/12",
      "192.168.0.0/16",
    ]
  }
}
```

Here we have created three security groups:

- one for worker-group-1 (SSH from 10.0.0.0/8),

- one for worker-group-2 (SSH from 192.168.0.0/16), and

- one shared by all worker groups (SSH from all three private address ranges).

These rules only open port 22. If your containers/pods need other ports opened, you can define them here.
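As a hedged sketch of opening another port, the `all_worker_mgmt` group could carry a second inline `ingress` block. Port 8080 and the VPC-only source range are assumptions for illustration. Keeping all rules inline matters here: the AWS provider documentation warns against combining inline rules with separate `aws_security_group_rule` resources on the same group.

```hcl
# Replaces the all_worker_mgmt resource above; port 8080 is hypothetical.
resource "aws_security_group" "all_worker_mgmt" {
  name_prefix = "all_worker_management"
  vpc_id      = module.vpc.vpc_id

  # Existing rule: SSH from the private address ranges.
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"]
  }

  # Hypothetical application port, reachable only from inside the VPC.
  ingress {
    from_port   = 8080
    to_port     = 8080
    protocol    = "tcp"
    cidr_blocks = ["10.0.0.0/16"]
  }
}
```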

Step 3: Creating the EKS cluster

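```hcl
module "eks" {
  # Note: the `subnets` and `worker_groups` inputs (and the `kubeconfig` and
  # `config_map_aws_auth` outputs used in Step 5) belong to the pre-v18
  # releases of this module; pin a matching `version` before applying.
  source       = "terraform-aws-modules/eks/aws"
  cluster_name = local.cluster_name
  subnets      = module.vpc.private_subnets
  vpc_id       = module.vpc.vpc_id

  tags = {
    Environment = "training"
    GithubRepo  = "terraform-aws-eks"
    GithubOrg   = "terraform-aws-modules"
  }

  worker_groups = [
    {
      name                          = "worker-group-1"
      instance_type                 = "t2.small"
      additional_userdata           = "echo foo bar" # placeholder user-data script
      asg_desired_capacity          = 2
      additional_security_group_ids = [aws_security_group.worker_group_mgmt_one.id]
    },
    {
      name                          = "worker-group-2"
      instance_type                 = "t2.medium"
      additional_userdata           = "echo foo bar" # placeholder user-data script
      additional_security_group_ids = [aws_security_group.worker_group_mgmt_two.id]
      asg_desired_capacity          = 1
    },
  ]

  # Attach the shared SSH security group to every worker node.
  worker_additional_security_group_ids = [aws_security_group.all_worker_mgmt.id]
}

# Cluster endpoint and CA certificate, read back after creation.
data "aws_eks_cluster" "cluster" {
  name = module.eks.cluster_id
}

# Short-lived authentication token for the cluster.
data "aws_eks_cluster_auth" "cluster" {
  name = module.eks.cluster_id
}
```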

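Using the EKS module from Terraform, we create the EKS cluster with two worker groups (Auto Scaling groups):

- worker-group-1, consisting of two t2.small instances

- worker-group-2, consisting of one t2.medium instance

The two data sources read back the cluster endpoint, CA certificate, and an authentication token, which the Kubernetes provider in Step 4 uses.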
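Because each worker group is an Auto Scaling group, it can also be given explicit size bounds. A minimal sketch of worker-group-1 inside the `module "eks"` block, assuming the pre-v18 module keys `asg_min_size` and `asg_max_size`; the values 1 and 4 are arbitrary:

```hcl
# Fragment for the module "eks" block: worker-group-1 with explicit bounds.
# asg_min_size / asg_max_size are assumed pre-v18 worker_groups keys.
worker_groups = [
  {
    name                          = "worker-group-1"
    instance_type                 = "t2.small"
    asg_min_size                  = 1 # never fewer than one node
    asg_desired_capacity          = 2 # starting size, as in the article
    asg_max_size                  = 4 # upper limit for scaling out
    additional_security_group_ids = [aws_security_group.worker_group_mgmt_one.id]
  },
]
```

The bounds only constrain the group: the node count stays at the desired capacity unless something changes it, such as the Kubernetes Cluster Autoscaler or a manual update.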

Step 4 (Optional): Use the Kubernetes provider to manage and deploy resources in EKS.

```hcl
provider "kubernetes" {
  # Kubernetes provider 1.x only: `load_config_file` was removed in 2.0.
  load_config_file       = false
  host                   = data.aws_eks_cluster.cluster.endpoint
  token                  = data.aws_eks_cluster_auth.cluster.token
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority.0.data)
}
```

With this provider configured, you can use Terraform's Kubernetes resources to define Kubernetes objects in the EKS cluster.
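As a small illustration, here is a sketch of one such resource: a namespace managed through the provider above. The resource label `training`, the namespace name `training-apps`, and the label values are hypothetical.

```hcl
# Hypothetical namespace created through the kubernetes provider above.
resource "kubernetes_namespace" "training" {
  metadata {
    name = "training-apps"

    labels = {
      environment = "training"
    }
  }
}
```

After `terraform apply`, the namespace should appear in the output of `kubectl get namespaces` once kubectl is configured (Step 7).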

Step 5 (Optional): Create outputs that print the configuration we have built so far.

```hcl
output "cluster_endpoint" {
  description = "Endpoint for EKS control plane."
  value       = module.eks.cluster_endpoint
}

output "cluster_security_group_id" {
  description = "Security group ids attached to the cluster control plane."
  value       = module.eks.cluster_security_group_id
}

output "kubectl_config" {
  description = "kubectl config as generated by the module."
  value       = module.eks.kubeconfig
}

output "config_map_aws_auth" {
  description = "A kubernetes configuration to authenticate to this EKS cluster."
  value       = module.eks.config_map_aws_auth
}

output "region" {
  description = "AWS region"
  value       = var.region
}

output "cluster_name" {
  description = "Kubernetes Cluster Name"
  value       = local.cluster_name
}
```

It will output the following details:

- the cluster endpoint

- the ID of the control-plane security group

- the kubeconfig generated by the module

- the aws-auth ConfigMap used to authenticate to EKS

- the AWS region

- the cluster name
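Since Step 7 needs the region and the cluster name together, one option is an extra output that assembles the `aws eks update-kubeconfig` command from `var.region` and `local.cluster_name`. The output name `update_kubeconfig_command` is hypothetical.

```hcl
# Hypothetical output: prints the command used in Step 7, built from the
# same region variable and cluster name as the rest of the configuration.
output "update_kubeconfig_command" {
  description = "Command that adds this cluster to ~/.kube/config."
  value       = "aws eks --region ${var.region} update-kubeconfig --name ${local.cluster_name}"
}
```

With the defaults used in this article, it evaluates to `aws eks --region ap-south-1 update-kubeconfig --name training-eks`.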

Step 6: Initialize, plan and apply the Terraform configuration

Initialize the working directory:

```shell
$ terraform init
```

This downloads the modules and providers that the configuration uses.

Review the plan:

```shell
$ terraform plan
```

This shows every resource that Terraform will create. It does not create anything.

Apply the configuration:

```shell
$ terraform apply -auto-approve
```

This creates all the resources defined in the configuration. Deploying everything takes about 10–15 minutes.

(Warning: `-auto-approve` skips the interactive confirmation, so Terraform starts creating resources immediately.)

Step 7: Install kubectl and configure it using the AWS CLI

Download kubectl from:

- https://kubernetes.io/docs/tasks/tools/install-kubectl/

Then run:

```shell
$ aws eks --region ap-south-1 update-kubeconfig --name training-eks
```

This command updates the ~/.kube/config file so that kubectl can access the EKS cluster.

Hooray, you have created your EKS cluster!

Now you can use kubectl to run and deploy your applications.

(Note: you can run `$ terraform output` to print all the outputs again.)
